Hot search

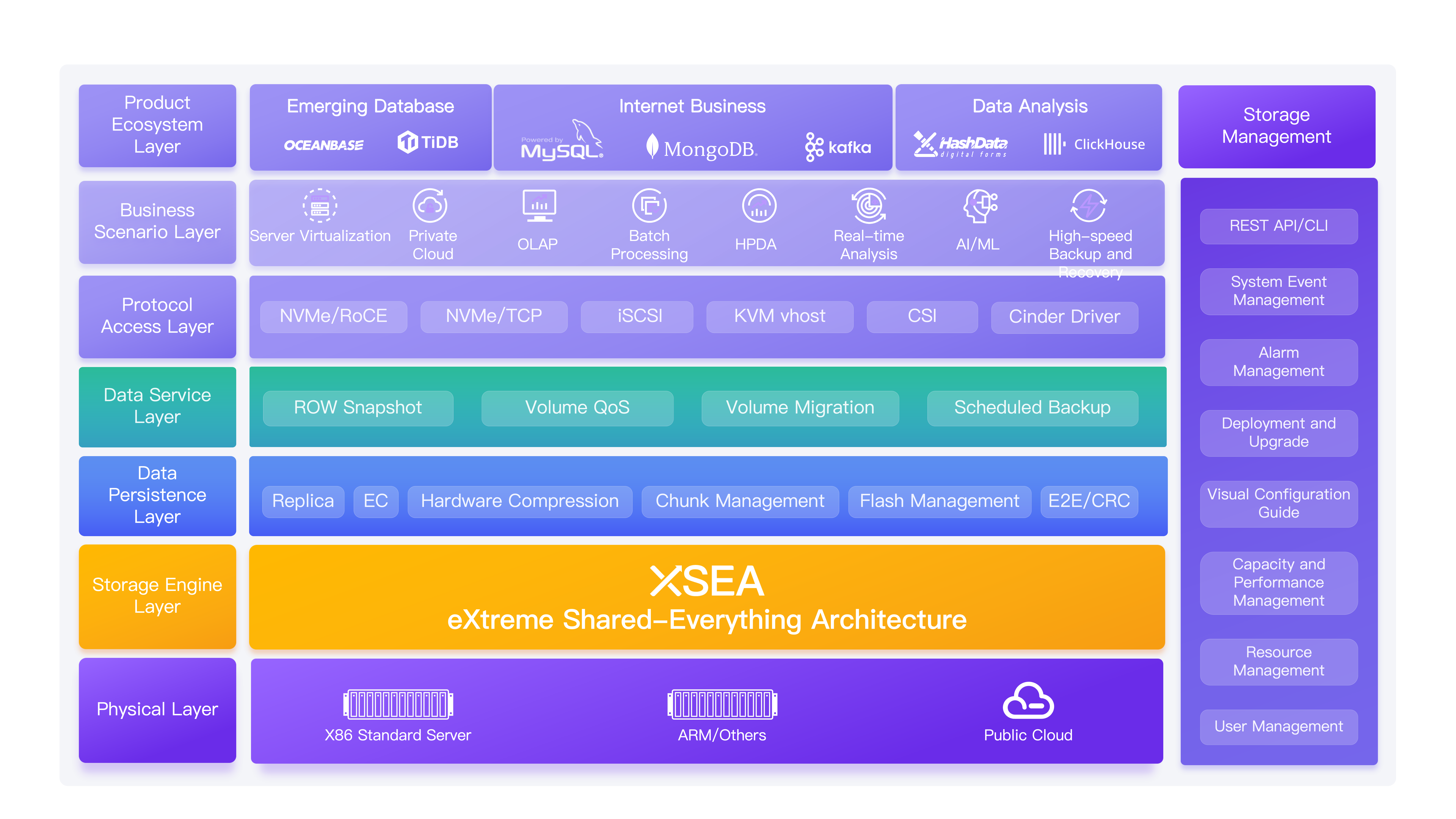

XEDP® Unified Data Platform

XEOS® Object Storage

天合翔宇®
